Source Code Analysis of H264 Software Encoding and Decoding in Android mediasoup and WebRTC

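- H264 support in mediasoup

- Android WebRTC video capture flow: source code analysis

- Why Android mediasoup does not use H264 hardware encoding/decoding

- VideoStreamEncoder initialization in mediasoup-client-android

- VideoStreamEncoder initialization in webrtc

- H264Encoder creation

- mediasoup H264Encoder initialization

- webrtc H264Encoder initialization

- webrtc H264Decoder initialization flow

- mediasoup signaling process

- Encoding/decoding functions provided by openh264

- webrtc H264 hardware video encoding

- webrtc video stream display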

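Originally published at https://h89.cn/archives/250.html

Latest version: https://gitee.com/chenjim/chenjimblog

This article is based on libmediasoupclient 3.2.0 and WebRTC branch-heads/4147 (M84).

You should first be familiar with the basics covered in Article 1 and Article 2.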

H264 support in mediasoup

- Enable `rtc_use_h264`

webrtc.gni contains the following line. It means H264 is not enabled by default on Android:

```gn
rtc_use_h264 = proprietary_codecs && !is_android && !is_ios && !(is_win && !is_clang)
```
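You can evaluate the default by hand to see its effect. The Java helper below is a hypothetical sketch that only mirrors the boolean logic of the GN line above. Its class and parameter names are made up and stand in for the GN variables.

```java
// Hypothetical helper mirroring the default from webrtc.gni:
// rtc_use_h264 = proprietary_codecs && !is_android && !is_ios && !(is_win && !is_clang)
final class RtcUseH264Default {
    private RtcUseH264Default() {}

    static boolean evaluate(boolean proprietaryCodecs, boolean isAndroid,
                            boolean isIos, boolean isWin, boolean isClang) {
        // Android and iOS are excluded outright; Windows only qualifies with clang.
        return proprietaryCodecs && !isAndroid && !isIos && !(isWin && !isClang);
    }
}
```

For an Android target (`isAndroid = true`), the result is `false` regardless of `proprietary_codecs`, so the default has to be overridden.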

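You can change this default in webrtc.gni to `true`. Alternatively, pass `rtc_use_h264=true` as an extra GN argument when building the AAR:

```shell
./tools_webrtc/android/build_aar.py --extra-gn-args 'rtc_use_h264=true'
```

- `forceH264` in mediasoup-demo-android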

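The code shows that this parameter is only used in UrlFactory.java, where it is appended to the URL.

Normally, if the URL contains `forceH264=true`, H264 should be used for encoding.

For example, if you open https://v3demo.mediasoup.org/?forceH264=true&roomId=123456 in a browser, the video it sends is H264.

(On Android this shows a black screen by default because no H264 decoder is available. A separate article covers the fix.)

However, in mediasoup-demo-android, the video is still encoded as VP8 even after you check forceH264 in the menu. This looks like a bug.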
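Building such a URL is plain string concatenation. The sketch below is hypothetical: its method name and parameters are not taken from UrlFactory.java. It only shows where the `forceH264` flag ends up in the query string. How the receiving side interprets the flag is outside its scope.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

// Hypothetical sketch: append the forceH264 flag to a room URL.
// Names are illustrative and do not come from mediasoup-demo-android.
final class RoomUrlSketch {
    private RoomUrlSketch() {}

    static String buildRoomUrl(String baseUrl, String roomId, boolean forceH264) {
        StringBuilder url = new StringBuilder(baseUrl)
                .append("/?roomId=")
                .append(URLEncoder.encode(roomId, StandardCharsets.UTF_8));
        if (forceH264) {
            // Only added when requested; the flag's meaning is up to the receiver.
            url.append("&forceH264=true");
        }
        return url.toString();
    }
}
```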

Android WebRTC video capture flow: source code analysis

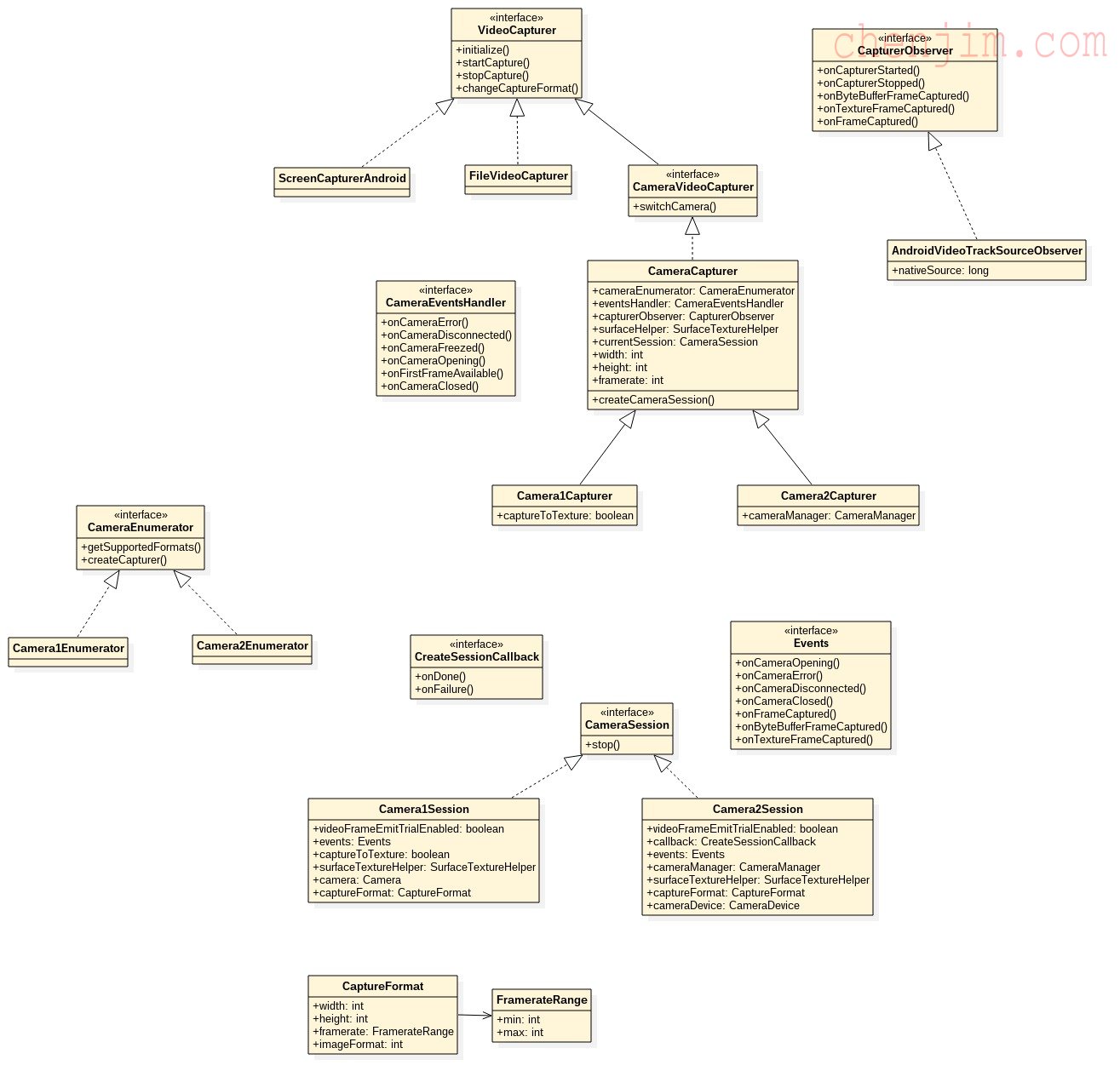

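For video capture, WebRTC mainly exposes the VideoCapturer interface. Its implementations are ScreenCapturerAndroid, FileVideoCapturer and CameraCapturer, which capture from the screen, a file and the camera respectively. Android has offered both the Camera1 and the Camera2 APIs over time, so CameraCapturer has two subclasses: Camera1Capturer and Camera2Capturer.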
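An app picks one of these implementations with a simple decision. The helper below loosely follows the order I believe AppRTCDemo's CallActivity uses: a video file first, then screen capture, then Camera2 if supported, otherwise Camera1. It is a hypothetical sketch that returns class names instead of constructing real Android capturers.

```java
// Hypothetical model of choosing a VideoCapturer implementation.
// It returns the class name rather than creating Android objects.
final class CapturerChooser {
    private CapturerChooser() {}

    static String choose(String videoFilePath, boolean screencast, boolean camera2Supported) {
        if (videoFilePath != null) {
            return "FileVideoCapturer";       // frames read from a file
        }
        if (screencast) {
            return "ScreenCapturerAndroid";   // frames from screen capture
        }
        // CameraCapturer has one subclass per Android camera API
        return camera2Supported ? "Camera2Capturer" : "Camera1Capturer";
    }
}
```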

Main class diagram

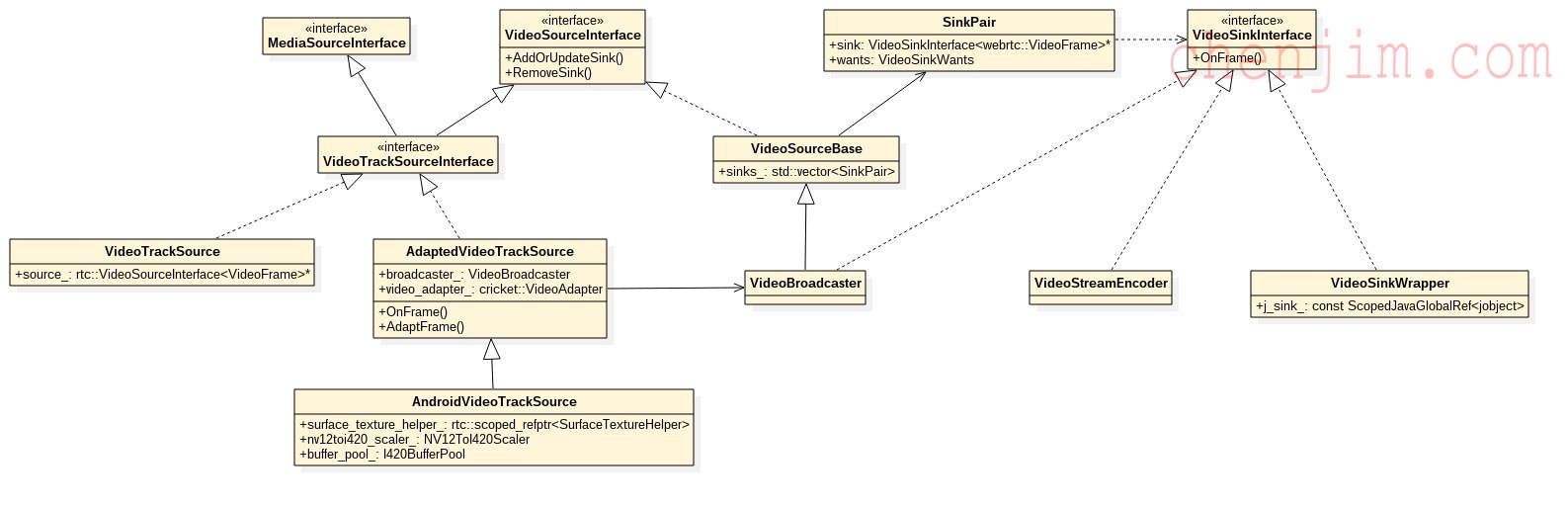

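The figure below shows the video capture and distribution flow.

For more details, see the original article "webrtc源码分析之视频采集之一" (WebRTC source code analysis: video capture, part 1). Thanks to Jimmy2012.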

Why Android mediasoup does not use H264 hardware encoding/decoding

- CreateMediaEngine in the Java layer of Android mediasoup

In mediasoup-demo-android\app\src\main\java\org\mediasoup\droid\lib\PeerConnectionUtils.java:

```java
VideoEncoderFactory encoderFactory =
    new DefaultVideoEncoderFactory(
        mEglBase.getEglBaseContext(),
        true /* enableIntelVp8Encoder */,
        true /* enableH264HighProfile */);
VideoDecoderFactory decoderFactory =
    new DefaultVideoDecoderFactory(mEglBase.getEglBaseContext());
mPeerConnectionFactory =
    builder
        .setAudioDeviceModule(adm)
        .setVideoEncoderFactory(encoderFactory)
        .setVideoDecoderFactory(decoderFactory)
        .createPeerConnectionFactory();
```

This is roughly the same as PeerConnectionClient.java in AppRTCDemo, which supports both software and hardware H264 encoding/decoding.
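DefaultVideoEncoderFactory combines a hardware factory and a software factory. As far as I know, its createEncoder wraps both in a VideoEncoderFallback when both can handle the codec. Otherwise it returns whichever one is non-null. The sketch below models that decision with strings instead of real VideoEncoder objects. The class and method names are illustrative, not the SDK's.

```java
import java.util.function.Function;

// Illustrative model of DefaultVideoEncoderFactory.createEncoder.
// Encoders are plain strings; a null factory result means "codec not supported".
final class DefaultFactoryModel {
    private final Function<String, String> hardwareFactory;
    private final Function<String, String> softwareFactory;

    DefaultFactoryModel(Function<String, String> hardwareFactory,
                        Function<String, String> softwareFactory) {
        this.hardwareFactory = hardwareFactory;
        this.softwareFactory = softwareFactory;
    }

    String createEncoder(String codecName) {
        String software = softwareFactory.apply(codecName);
        String hardware = hardwareFactory.apply(codecName);
        if (hardware != null && software != null) {
            // Same argument order as VideoEncoderFallback(fallback, primary)
            return "VideoEncoderFallback(" + software + ", " + hardware + ")";
        }
        return hardware != null ? hardware : software;
    }
}
```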

The flow behind `.createPeerConnectionFactory()` is:

--> createPeerConnectionFactory()

--> JNI_PeerConnectionFactory_CreatePeerConnectionFactory(...) (webrtc/src/sdk/android/src/jni/pc/peer_connection_factory.cc)

--> CreatePeerConnectionFactoryForJava(...) (webrtc/src/sdk/android/src/jni/pc/peer_connection_factory.cc)

```cpp
CreatePeerConnectionFactoryForJava(...) {
  // ... (excerpt)
  media_dependencies.task_queue_factory = dependencies.task_queue_factory.get();
  media_dependencies.adm = std::move(audio_device_module);
  media_dependencies.audio_encoder_factory = std::move(audio_encoder_factory);
  media_dependencies.audio_decoder_factory = std::move(audio_decoder_factory);
  media_dependencies.audio_processing = std::move(audio_processor);
  // encoderFactory and decoderFactory passed in from PeerConnectionUtils.java
  media_dependencies.video_encoder_factory =
      absl::WrapUnique(CreateVideoEncoderFactory(jni, jencoder_factory));
  media_dependencies.video_decoder_factory =
      absl::WrapUnique(CreateVideoDecoderFactory(jni, jdecoder_factory));
  dependencies.media_engine =
      cricket::CreateMediaEngine(std::move(media_dependencies));
  // ...
}
```

- CreateMediaEngine in the native layer of Android mediasoup

Handler::GetNativeRtpCapabilities (libmediasoupclient/src/Device.cpp)

--> std::unique_ptr<PeerConnection> pc(new PeerConnection(privateListener.get(), peerConnectionOptions)) (libmediasoupclient/src/Handler.cpp)

--> webrtc::CreateBuiltinVideoEncoderFactory (libmediasoupclient/src/PeerConnection.cpp)

--> webrtc::CreatePeerConnectionFactory

--> cricket::CreateMediaEngine(std::move(media_dependencies)) (webrtc/src/api/create_peerconnection_factory.cc)

--> CreateMediaEngine (webrtc/src/media/engine/webrtc_media_engine.cc)

--> WebRtcVideoEngine::WebRtcVideoEngine (webrtc/src/media/engine/webrtc_video_engine.cc)

The following excerpt from the PeerConnection constructor in libmediasoupclient/src/PeerConnection.cpp shows the factories that create the audio and video encoders and decoders:

```cpp
this->peerConnectionFactory = webrtc::CreatePeerConnectionFactory(
    this->networkThread.get(),
    this->workerThread.get(),
    this->signalingThread.get(),
    nullptr /*default_adm*/,
    webrtc::CreateBuiltinAudioEncoderFactory(),
    webrtc::CreateBuiltinAudioDecoderFactory(),
    webrtc::CreateBuiltinVideoEncoderFactory(),
    webrtc::CreateBuiltinVideoDecoderFactory(),
    nullptr /*audio_mixer*/,
    nullptr /*audio_processing*/);
```

These CreateBuiltin...Factory objects are also passed on to CreateMediaEngine.

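The two items above show that CreateMediaEngine receives different factories in the Java layer and in the native layer. The encoding and decoding actually uses the latter, the built-in factories, as shown later.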
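The author concludes that the native built-in factories are the ones actually used. The consequence can be modeled in a few lines: a stream only ever uses the encoder factory of the PeerConnectionFactory that created it. Whatever the Java-side DefaultVideoEncoderFactory could offer is never consulted. This is a hypothetical model, not the real API.

```java
import java.util.function.UnaryOperator;

// Hypothetical model: a PeerConnectionFactory keeps the video encoder factory it
// was created with, and every send stream created through it uses that factory.
final class PcFactoryModel {
    private final UnaryOperator<String> videoEncoderFactory;

    PcFactoryModel(UnaryOperator<String> videoEncoderFactory) {
        this.videoEncoderFactory = videoEncoderFactory;
    }

    String createSendStreamEncoder(String codec) {
        return videoEncoderFactory.apply(codec);
    }

    // Java layer: DefaultVideoEncoderFactory may hand out a hardware encoder.
    static PcFactoryModel javaLayer() {
        return new PcFactoryModel(c -> "HardwareVideoEncoder(" + c + ")");
    }

    // libmediasoupclient: CreateBuiltinVideoEncoderFactory only has software encoders.
    static PcFactoryModel mediasoupNative() {
        return new PcFactoryModel(c -> "Builtin software encoder(" + c + ")");
    }
}
```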

VideoStreamEncoder initialization in mediasoup-client-android

The rest of this article assumes H264 software encoding already works.

The call stack is as follows:

private void onNewConsumer(...) (org.mediasoup.droid.lib.RoomClient.java)

--> public Consumer consume(...) (org.mediasoup.droid.RecvTransport.java)

--> Java_org_mediasoup_droid_RecvTransport_nativeConsume (mediasoup-client-android\mediasoup-client\src\main\jni\transport_jni.cpp)

--> Consumer* RecvTransport::Consume(...) (libmediasoupclient/src/Transport.cpp)

--> RecvHandler::RecvResult RecvHandler::Receive(...) (libmediasoupclient/src/Handler.cpp)

--> this->pc->SetRemoteDescription(PeerConnection::SdpType::OFFER, offer)

--> PeerConnection::SetRemoteDescription(...) (webrtc/src/pc/peer_connection.cc)

--> PeerConnection::DoSetRemoteDescription(...)

--> PeerConnection::ApplyRemoteDescription(...)

--> PeerConnection::UpdateSessionState(...)

--> PeerConnection::PushdownMediaDescription(...)

--> BaseChannel::SetRemoteContent(...) (webrtc/src/pc/channel.cc)

--> VideoChannel::SetRemoteContent_w(...)

--> WebRtcVideoChannel::SetSendParameters (webrtc/src/media/engine/webrtc_video_engine.cc)

--> WebRtcVideoChannel::ApplyChangedParams(...)

--> WebRtcVideoChannel::WebRtcVideoSendStream::SetSendParameters(...)

--> WebRtcVideoChannel::WebRtcVideoSendStream::SetCodec(..)

--> WebRtcVideoChannel::WebRtcVideoSendStream::RecreateWebRtcStream(...)

--> webrtc::VideoSendStream* Call::CreateVideoSendStream(,) (webrtc/src/call/call.cc)

--> webrtc::VideoSendStream* Call::CreateVideoSendStream(,,)

--> VideoSendStream::VideoSendStream(..) (webrtc/src/video/video_send_stream.cc)

--> std::unique_ptr<VideoStreamEncoderInterface> CreateVideoStreamEncoder(..) (webrtc/src/api/video/video_stream_encoder_create.cc)

--> VideoStreamEncoder::VideoStreamEncoder(...) (webrtc/src/video/video_stream_encoder.cc)

VideoStreamEncoder initialization in webrtc

This part is based on the AppRTCDemo app with H264 encoding/decoding enabled. The call stack and notes are as follows:

onCreateSuccess() (src/main/java/org/appspot/apprtc/PeerConnectionClient.java)

--> peerConnection.setLocalDescription(sdpObserver, newDesc)

--> Java_org_webrtc_PeerConnection_nativeSetLocalDescription(...) (./out/release-build/arm64-v8a/gen/sdk/android/generated_peerconnection_jni/PeerConnection_jni.h)

--> PeerConnection::SetLocalDescription (pc/peer_connection.cc)

--> PeerConnection::DoSetLocalDescription

--> PeerConnection::ApplyLocalDescription

--> PeerConnection::UpdateSessionState

--> PeerConnection::PushdownMediaDescription() (pc/peer_connection.cc)

--> BaseChannel::SetLocalContent() (pc/channel.cc)

--> VideoChannel::SetLocalContent_w(...)

--> BaseChannel::UpdateLocalStreams_w(..)

--> WebRtcVideoChannel::AddSendStream(..) (media/engine/webrtc_video_engine.cc)

--> WebRtcVideoChannel::WebRtcVideoSendStream::WebRtcVideoSendStream(..)

--> WebRtcVideoChannel::WebRtcVideoSendStream::SetCodec(..)

--> WebRtcVideoChannel::WebRtcVideoSendStream::RecreateWebRtcStream()

--> webrtc::VideoSendStream* Call::CreateVideoSendStream(..) (call/call.cc)

--> VideoSendStream::VideoSendStream(..) (video/video_send_stream.cc)

--> std::unique_ptr<VideoStreamEncoderInterface> CreateVideoStreamEncoder(..) (api/video/video_stream_encoder_create.cc)

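--> VideoStreamEncoder::VideoStreamEncoder() (video/video_stream_encoder.cc)

The two subsections above show that mediasoup and webrtc create VideoStreamEncoder in slightly different ways. In mediasoup, the path starts from SetRemoteDescription and reaches RecreateWebRtcStream through SetRemoteContent and SetSendParameters. In AppRTCDemo, it starts from SetLocalDescription and goes through SetLocalContent and AddSendStream.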

H264Encoder creation

Video frames are dispatched to

VideoStreamEncoder::OnFrame (webrtc/src/video/video_stream_encoder.cc)

--> VideoStreamEncoder::ReconfigureEncoder()

--> encoder_ = settings_.encoder_factory->CreateVideoEncoder(encoder_config_.video_format) (webrtc/src/video/video_stream_encoder.cc)

// `settings_` is assigned when VideoStreamEncoder is created in the previous step. It determines which encoder is created later.

- The H264 encoder call stack in mediasoup:

--> internal_encoder = std::make_unique<EncoderSimulcastProxy>(internal_encoder_factory_.get(), format) (webrtc/src/api/video_codecs/builtin_video_encoder_factory.cc)

--> EncoderSimulcastProxy::EncoderSimulcastProxy (webrtc/src/media/engine/encoder_simulcast_proxy.cc)

--> InternalEncoderFactory::CreateVideoEncoder (webrtc/src/media/engine/internal_encoder_factory.cc)

--> H264Encoder::Create() (webrtc/src/modules/video_coding/codecs/h264/h264.cc)

--> H264EncoderImpl::H264EncoderImpl (webrtc/src/modules/video_coding/codecs/h264/h264_encoder_impl.cc)
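The dispatch step in InternalEncoderFactory::CreateVideoEncoder is a case-insensitive match on the SDP codec name. The Java rendering below is a hypothetical sketch. The `h264Built` flag is my own stand-in for whether WebRTC was compiled with `rtc_use_h264=true`, and the real function's AV1 branch is left out.

```java
// Hypothetical Java rendering of InternalEncoderFactory::CreateVideoEncoder.
// h264Built models whether WebRTC was built with rtc_use_h264=true.
final class InternalEncoderDispatch {
    private InternalEncoderDispatch() {}

    static String createVideoEncoder(String sdpCodecName, boolean h264Built) {
        // SDP codec names are compared case-insensitively, like absl::EqualsIgnoreCase.
        if ("VP8".equalsIgnoreCase(sdpCodecName)) {
            return "VP8Encoder";
        }
        if ("VP9".equalsIgnoreCase(sdpCodecName)) {
            return "VP9Encoder";
        }
        if ("H264".equalsIgnoreCase(sdpCodecName)) {
            // H264EncoderImpl wraps OpenH264; without rtc_use_h264 nothing usable exists.
            return h264Built ? "H264EncoderImpl" : null;
        }
        return null; // unsupported codec name
    }
}
```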

- The call stack in webrtc AppRTCDemo:

--> VideoEncoderFactoryWrapper::CreateVideoEncoder (sdk/android/src/jni/video_encoder_factory_wrapper.cc)

--> Java_VideoEncoderFactory_createEncoder() (./out/release-build/arm64-v8a/gen/sdk/android/generated_video_jni/VideoEncoderFactory_jni.h)

// This calls createEncoder(..) of the Java-layer VideoEncoderFactory.
// I enabled hardware encoding and H264 Baseline encoding/decoding in the app, so DefaultVideoEncoderFactory is the VideoEncoderFactory implementation, and it returns a VideoEncoderFallback(...)

--> VideoEncoderFallback(VideoEncoder fallback, VideoEncoder primary) (sdk/android/api/org/webrtc/VideoEncoderFallback.java)

--> public long createNativeVideoEncoder() (sdk/android/api/org/webrtc/VideoEncoderFallback.java)

--> JNI_VideoEncoderFallback_CreateEncoder(...) (sdk/android/src/jni/video_encoder_fallback.cc)

--> CreateVideoEncoderSoftwareFallbackWrapper() (api/video_codecs/video_encoder_software_fallback_wrapper.cc)

// VideoEncoderSoftwareFallbackWrapper is the encoder that is finally used

--> VideoEncoderSoftwareFallbackWrapper::VideoEncoderSoftwareFallbackWrapper(..) (api/video_codecs/video_encoder_software_fallback_wrapper.cc)

The function JNI_VideoEncoderFallback_CreateEncoder is as follows:

```cpp
static jlong JNI_VideoEncoderFallback_CreateEncoder(...) {
  // Create the software encoder from the j_fallback_encoder passed in,
  // via JavaToNativeVideoEncoder
  std::unique_ptr<VideoEncoder> fallback_encoder =
      JavaToNativeVideoEncoder(jni, j_fallback_encoder);
  // Create the hardware encoder from the j_primary_encoder passed in,
  // via JavaToNativeVideoEncoder (the primary encoder comes from
  // createEncoder in DefaultVideoEncoderFactory.java)
  std::unique_ptr<VideoEncoder> primary_encoder =
      JavaToNativeVideoEncoder(jni, j_primary_encoder);
  // Pass the software and hardware encoders to
  // CreateVideoEncoderSoftwareFallbackWrapper
  VideoEncoder* nativeWrapper =
      CreateVideoEncoderSoftwareFallbackWrapper(std::move(fallback_encoder),
                                                std::move(primary_encoder))
          .release();
  return jlongFromPointer(nativeWrapper);
}
```

The function VideoEncoderFactoryWrapper::CreateVideoEncoder is as follows:

```cpp
std::unique_ptr<VideoEncoder> VideoEncoderFactoryWrapper::CreateVideoEncoder(
    const SdpVideoFormat& format) {
  JNIEnv* jni = AttachCurrentThreadIfNeeded();
  ScopedJavaLocalRef<jobject> j_codec_info =
      SdpVideoFormatToVideoCodecInfo(jni, format);
  ScopedJavaLocalRef<jobject> encoder = Java_VideoEncoderFactory_createEncoder(
      jni, encoder_factory_, j_codec_info);
  if (!encoder.obj())
    return nullptr;
  return JavaToNativeVideoEncoder(jni, encoder);
}
```

VideoEncoderFactoryWrapper::CreateVideoEncoder then passes the encoder above to JavaToNativeVideoEncoder:

std::unique_ptr<VideoEncoder> JavaToNativeVideoEncoder(..)

--> Java_VideoEncoder_createNativeVideoEncoder(..) (out/release-build/arm64-v8a/gen/sdk/android/generated_video_jni/VideoEncoder_jni.h)

--> createNativeVideoEncoder() (sdk/android/api/org/webrtc/VideoEncoder.java)

// This goes back to the Java layer. VideoEncoder is only an interface;
// createEncoder in DefaultVideoEncoderFactory.java shows that VideoEncoderFallback.java is used here

--> Java_org_webrtc_VideoEncoderFallback_nativeCreateEncoder (out/release-build/arm64-v8a/gen/sdk/android/generated_video_jni/VideoEncoderFallback_jni.h)

--> JNI_VideoEncoderFallback_CreateEncoder(...) (sdk/android/src/jni/video_encoder_fallback.cc)

The class relationship diagram is shown below.
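The idea behind VideoEncoderSoftwareFallbackWrapper is to try the primary (hardware) encoder first and switch to the software fallback when it fails. The sketch below is a simplified, hypothetical model of only the InitEncode decision. As far as I know, the real wrapper can also switch later during Encode, which is not modeled here. SketchEncoder and FallbackWrapperSketch are made-up names.

```java
// Hypothetical minimal encoder abstraction for the sketch.
interface SketchEncoder {
    boolean initEncode(); // true on success
    String name();
}

// Simplified model of the fallback idea: prefer the primary (hardware) encoder,
// fall back to the software encoder if the primary fails to initialize.
final class FallbackWrapperSketch {
    private final SketchEncoder fallback;
    private final SketchEncoder primary;
    private SketchEncoder active;

    FallbackWrapperSketch(SketchEncoder fallback, SketchEncoder primary) {
        this.fallback = fallback;
        this.primary = primary;
    }

    boolean initEncode() {
        if (primary.initEncode()) {
            active = primary;
            return true;
        }
        // Hardware initialization failed: try the software encoder instead
        if (fallback.initEncode()) {
            active = fallback;
            return true;
        }
        active = null;
        return false;
    }

    String activeName() {
        return active == null ? "none" : active.name();
    }

    // Test helper: an encoder whose initEncode always returns initOk.
    static SketchEncoder fixed(String name, boolean initOk) {
        return new SketchEncoder() {
            public boolean initEncode() { return initOk; }
            public String name() { return name; }
        };
    }
}
```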

In addition, internal_encoder_factory.cc creates the VP8, VP9, H264 and AV1 encoders:

```cpp
std::unique_ptr<VideoEncoder> InternalEncoderFactory::CreateVideoEncoder(
    const SdpVideoFormat& format) {
  if (absl::EqualsIgnoreCase(format.name, cricket::kVp8CodecName))
    return VP8Encoder::Create();
  if (absl::EqualsIgnoreCase(format.name, cricket::kVp9CodecName))
    return VP9Encoder::Create(cricket::VideoCodec(format));
  if (absl::EqualsIgnoreCase(format.name, cricket::kH264CodecName))
    return H264Encoder::Create(cricket::VideoCodec(format));
  if (kIsLibaomAv1EncoderSupported &&
      absl::EqualsIgnoreCase(format.name, cricket::kAv1CodecName))
    return CreateLibaomAv1Encoder();
  return nullptr;
}
```

mediasoup H264Encoder initialization

Video frames are dispatched to

VideoStreamEncoder::OnFrame (webrtc/src/video/video_stream_encoder.cc)

--> VideoStreamEncoder::ReconfigureEncoder()

--> encoder->InitEncode(...)

--> EncoderSimulcastProxy::InitEncode(...) (webrtc/src/media/engine/encoder_simulcast_proxy.cc)

--> H264EncoderImpl::InitEncode(...) (webrtc/src/modules/video_coding/codecs/h264/h264_encoder_impl.cc)

Along the way, H264EncoderImpl::SetRates, H264EncoderImpl::CreateEncoderParams and other functions are also called.

For H264Encoder encoding, video frames are dispatched to

VideoStreamEncoder::OnFrame (webrtc/src/video/video_stream_encoder.cc)

--> MaybeEncodeVideoFrame

--> VideoStreamEncoder::EncodeVideoFrame

--> encoder->Encode(...)

--> H264EncoderImpl::Encode (webrtc/src/modules/video_coding/codecs/h264/h264_encoder_impl.cc)

--> encoded_image_callback_->OnEncodedImage (callback invoked when encoding completes)
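The encode path above is push-style: frames go in through OnFrame, and encoded output comes back through a callback rather than a return value. The Java sketch below models only that shape. EncodedImageSink and EncodePathSketch are hypothetical names, and the "encoding" is a plain byte copy.

```java
// Hypothetical callback interface, standing in for EncodedImageCallback.
interface EncodedImageSink {
    void onEncodedImage(int frameIndex, byte[] payload);
}

// Heavily simplified model of the encode path: each frame is handed to the codec,
// and the codec reports its output through the callback.
final class EncodePathSketch {
    private final EncodedImageSink callback;
    private int nextFrameIndex = 0;

    EncodePathSketch(EncodedImageSink callback) {
        this.callback = callback;
    }

    // Stands in for OnFrame -> MaybeEncodeVideoFrame -> EncodeVideoFrame -> Encode.
    void onFrame(byte[] rawFrame) {
        // A real encoder such as H264EncoderImpl would compress here; the sketch copies.
        byte[] encoded = rawFrame.clone();
        // Output is delivered through the callback, not returned to the caller.
        callback.onEncodedImage(nextFrameIndex++, encoded);
    }
}
```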

webrtc H264Encoder initialization

VideoStreamEncoder::OnFrame (webrtc/src/video/video_stream_encoder.cc)

--> VideoStreamEncoder::MaybeEncodeVideoFrame

--> VideoStreamEncoder::ReconfigureEncoder()

--> VideoEncoderSoftwareFallbackWrapper::InitEncode (api/video_codecs/video_encoder_software_fallback_wrapper.cc)

--> VideoEncoderWrapper::InitEncode (sdk/android/src/jni/video_encoder_wrapper.cc)

--> VideoEncoderWrapper::InitEncodeInternal

--> Java_VideoEncoderWrapper_createEncoderCallback (out/release-build/arm64-v8a/gen/sdk/android/generated_video_jni/VideoEncoderWrapper_jni.h)

// In the end, the Java-layer HardwareVideoEncoder is used

--> public VideoCodecStatus initEncode(Settings settings, Callback callback) (sdk/android/src/java/org/webrtc/HardwareVideoEncoder.java)

webrtc H264Decoder initialization flow

VideoReceiver2::Decode (modules/video_coding/video_receiver2.cc)

--> VCMDecoderDataBase::GetDecoder (modules/video_coding/decoder_database.cc)

--> H264DecoderImpl::InitDecode (modules/video_coding/codecs/h264/h264_decoder_impl.cc)

--> avcodec_find_decoder (third_party/ffmpeg/libavcodec/allcodecs.c)

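Inside it, av_codec_iterate walks codec_list (in libavcodec/codec_list.c).

If the H264 decoder is missing from that list, avcodec_find_decoder cannot find it. That is why this file has to be modified to enable H264 software encoding/decoding.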
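The lookup can be pictured as a linear scan over a compiled-in list. The Java sketch below is a hypothetical model of that idea only. The real code compares AVCodecID values and checks that the entry is a decoder. The list contents in the test are example entries, not the actual codec_list of any WebRTC build.

```java
import java.util.List;

// Hypothetical model of avcodec_find_decoder(): scan the compiled-in codec list
// and return the first matching decoder, or null if it was never compiled in.
final class CodecListSketch {
    private CodecListSketch() {}

    static String findDecoder(List<String> codecList, String codecId) {
        for (String decoder : codecList) {
            if (decoder.equals(codecId)) {
                return decoder;
            }
        }
        return null; // corresponds to avcodec_find_decoder returning NULL
    }
}
```

If `h264` is not in the list, the lookup returns null and H264DecoderImpl::InitDecode has nothing to open. Adding the entry to codec_list changes that.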

mediasoup signaling process

Reference:

https://www.cnblogs.com/WillingCPP/p/13646225.html

Encoding/decoding functions provided by openh264

WelsCreateDecoder;

WelsCreateSVCEncoder;

WelsDestroyDecoder;

WelsDestroySVCEncoder;

WelsGetCodecVersion;

WelsGetCodecVersionEx;

An example of encoding and decoding with openh264:

https://blog.csdn.net/NB_vol_1/article/details/103376649
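These functions come in create/destroy pairs: every WelsCreateSVCEncoder or WelsCreateDecoder needs a matching WelsDestroySVCEncoder or WelsDestroyDecoder. The sketch below is a hypothetical Java wrapper that only records which C functions would be called, to show how try-with-resources enforces that pairing. It assumes no real OpenH264 Java binding and leaves out the actual initialization, encoding and decoding calls.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical wrapper illustrating the create/destroy pairing of the OpenH264 C API.
// Native calls are only recorded in CALL_LOG; nothing is actually invoked.
final class OpenH264Handle implements AutoCloseable {
    static final List<String> CALL_LOG = new ArrayList<>();
    private final boolean isEncoder;
    private boolean closed;

    private OpenH264Handle(boolean isEncoder) {
        this.isEncoder = isEncoder;
        CALL_LOG.add(isEncoder ? "WelsCreateSVCEncoder" : "WelsCreateDecoder");
    }

    static OpenH264Handle encoder() { return new OpenH264Handle(true); }
    static OpenH264Handle decoder() { return new OpenH264Handle(false); }

    @Override
    public void close() {
        if (closed) {
            return; // destroy exactly once
        }
        closed = true;
        CALL_LOG.add(isEncoder ? "WelsDestroySVCEncoder" : "WelsDestroyDecoder");
    }
}
```

Resources in a try-with-resources block are closed in reverse order, so the decoder is destroyed before the encoder.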

webrtc H264 hardware video encoding

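Android is fragmented. Early versions did not support hardware encoding, and devices use chips from different vendors such as Qualcomm, MTK, HiSilicon and Samsung.

As a result, not every Android device supports hardware encoding/decoding.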

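- Modify SOFTWARE_IMPLEMENTATION_PREFIXES in MediaCodecUtils.java

I recommend removing "OMX.SEC." from it. HardwareVideoDecoderFactory.java and PlatformSoftwareVideoDecoderFactory both use MediaCodecUtils.SOFTWARE_IMPLEMENTATION_PREFIXES. Because that list contains this vendor's prefix, the vendor's codecs end up on the blacklist of codecs that are not treated as hardware codecs.

- The H264-capable codec name prefixes are listed below, taken from a blog post by 一朵桃花压海棠: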

```java
private static final String[] supportedH264HwCodecPrefixes = {
    "OMX.qcom.",
    "OMX.Intel.",
    "OMX.Exynos.",
    "OMX.Nvidia.H264." /*Nexus 7(2012), Nexus 9, Tegra 3, Tegra K1*/,
    "OMX.ittiam.video." /*Xiaomi Mi 1s*/,
    "OMX.SEC.avc." /*Exynos 3110, Nexus S*/,
    "OMX.IMG.MSVDX." /*Huawei Honor 6, Kirin 920*/,
    "OMX.k3.video." /*Huawei Honor 3C, Kirin 910*/,
    "OMX.hisi." /*Huawei Premium Phones, Kirin 950*/,
    "OMX.TI.DUCATI1." /*Galaxy Nexus, Ti OMAP4460*/,
    "OMX.MTK.VIDEO." /*no sense*/,
    "OMX.LG.decoder." /*no sense*/,
    "OMX.rk.video_decoder." /*Youku TVBox. our service doesn't need this */,
    "OMX.amlogic.avc" /*MiBox1, 1s, 2. our service doesn't need this */
};
```
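Both lists work by string-prefix matching on the MediaCodec name. The following sketch shows why a blacklist entry such as "OMX.SEC." hides a codec that the allowlist would accept. It is a simplified, hypothetical model, not the SDK's actual logic. Beyond "OMX.SEC.", it assumes the software blacklist also contains "OMX.google.". The codec names in the test are illustrative.

```java
// Simplified, hypothetical model of the prefix checks discussed above.
final class H264HwPrefixCheck {
    // A subset of the supportedH264HwCodecPrefixes list above.
    static final String[] SUPPORTED_H264_HW_PREFIXES = {
        "OMX.qcom.", "OMX.Intel.", "OMX.Exynos.", "OMX.SEC.avc.", "OMX.hisi.", "OMX.MTK.VIDEO."
    };

    private H264HwPrefixCheck() {}

    static boolean startsWithAny(String name, String[] prefixes) {
        for (String prefix : prefixes) {
            if (name.startsWith(prefix)) {
                return true;
            }
        }
        return false;
    }

    // Usable only if the name matches a known H264 hardware prefix
    // and is not caught by the software-implementation blacklist.
    static boolean isUsableH264HwCodec(String codecName, String[] softwarePrefixes) {
        return startsWithAny(codecName, SUPPORTED_H264_HW_PREFIXES)
                && !startsWithAny(codecName, softwarePrefixes);
    }
}
```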

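- Modify the relevant code in HardwareVideoEncoderFactory.java and HardwareVideoDecoderFactory to support more chips

For a brute-force modification, see https://www.pianshen.com/article/63171561802/

- I recently came across a less invasive modification: create a custom factory, CustomHardwareVideoEncoderFactory, based on HardwareVideoEncoderFactory.

For the implementation, see https://github.com/shenbengit/WebRTCExtension

Either way, some devices may turn out not to support hardware encoding in practice.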
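The less invasive approach leaves the SDK untouched and adds the extra allowlist in the app's own factory. The decorator below is a hypothetical sketch of that idea only. It is not the code from WebRTCExtension, and CustomHwAllowlist is a made-up name. It keeps the SDK's own decision and adds extra accepted prefixes on top of it.

```java
import java.util.Arrays;
import java.util.function.Predicate;

// Hypothetical decorator: keep the SDK's decision and additionally accept
// codec names matching extra prefixes, instead of editing the SDK itself.
final class CustomHwAllowlist implements Predicate<String> {
    private final Predicate<String> sdkDecision;
    private final String[] extraPrefixes;

    CustomHwAllowlist(Predicate<String> sdkDecision, String... extraPrefixes) {
        this.sdkDecision = sdkDecision;
        this.extraPrefixes = extraPrefixes.clone();
    }

    @Override
    public boolean test(String codecName) {
        return sdkDecision.test(codecName)
                || Arrays.stream(extraPrefixes).anyMatch(codecName::startsWith);
    }
}
```

Accepting a prefix does not make the chip work. Devices that fail at runtime still need the software fallback.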

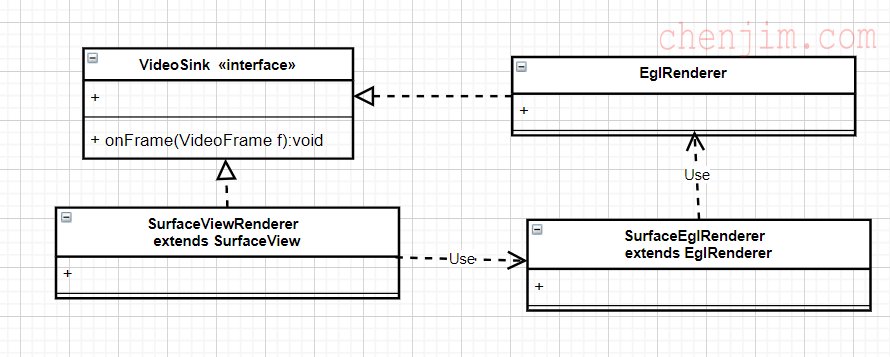

webrtc video stream display

CallActivity.java shows that VideoFrame objects are displayed with SurfaceViewRenderer.java.

SurfaceViewRenderer uses SurfaceEglRenderer.java.

The related class relationships are shown in the figure below.
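Frames reach SurfaceViewRenderer because it implements org.webrtc.VideoSink and is added to a video track as a sink. The track then forwards every frame to each registered sink. The sketch below is a hypothetical plain-Java model of that fan-out. FrameSink and TrackSketch are made-up names, and frames are strings.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for org.webrtc.VideoSink.
interface FrameSink {
    void onFrame(String frame);
}

// Hypothetical model of a video track delivering frames to its sinks.
final class TrackSketch {
    private final List<FrameSink> sinks = new ArrayList<>();

    void addSink(FrameSink sink) {
        sinks.add(sink);
    }

    void removeSink(FrameSink sink) {
        sinks.remove(sink);
    }

    // Every registered sink (e.g. a renderer) receives every frame.
    void deliver(String frame) {
        for (FrameSink sink : sinks) {
            sink.onFrame(frame);
        }
    }
}
```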

Other related documents

- WebRTC on Android: how to enable hardware encoding on multiple devices

https://medium.com/bumble-tech/webrtc-on-android-how-to-enable-hardware-encoding-on-multiple-devices-5bd819c0ce5

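Article link: Source Code Analysis of H264 Software Encoding and Decoding in Android mediasoup and WebRTC - https://h89.cn/archives/250.html

Copyright notice: original article, licensed under CC BY-SA 4.0. When reposting, include a link to the original and this notice.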
